weathertwin.xyz

Demo here!

I started this project after reading an article on Fox Weather, and another on Medium. The general premise of both articles was that different regions around the world, even those separated by vast distances, can have very similar climates.

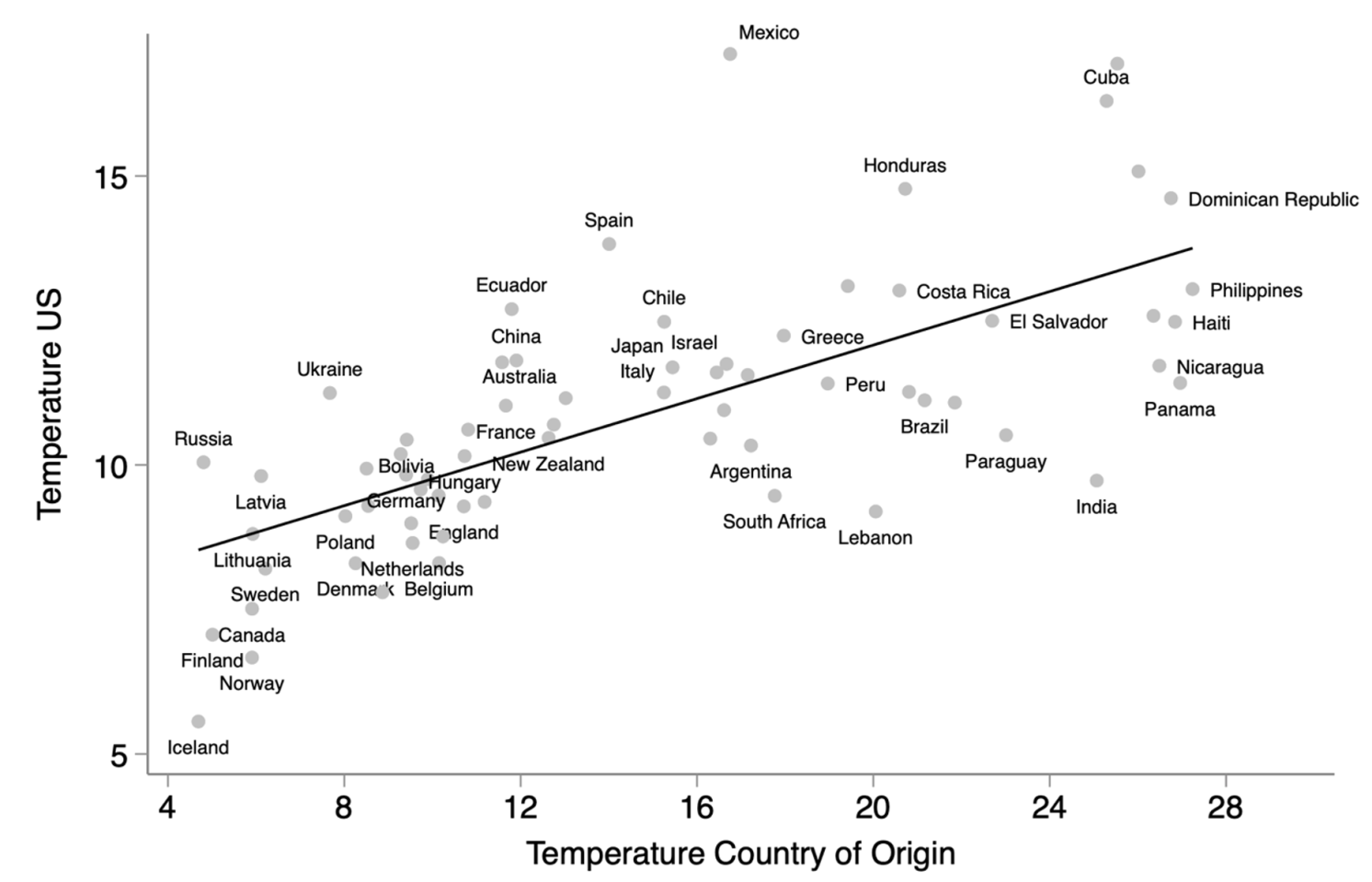

Research has shown that immigrants to the US historically tended to settle in places with climates similar to those of their home countries. Examples include the Scandinavian immigrants who settled in northern states like Minnesota, and Russians in Chicago.

These articles recirculated on Twitter a month or two before I wrote this (and I wrote this months ago). A lot of the replies were along the lines of "hey, I live in Dallas. Anyone know what like Middle Eastern city would be closest to it or something?" It got me thinking: how could someone automate finding these matches? For example, if I typed in "San Diego", it would probably return a city on the Mediterranean coast or in the Middle East. I set out to build this app and see whether I could get any interesting results.

Figure 1: Graph showing the correlation between the climate of immigrants' home countries and their settlement locations.

The Solution

My first hurdle was finding a way to retrieve these results quickly. I settled on using a vector database to compare the climates of cities around the world against US cities. That meant gathering a ton of climate data for different cities and then extracting high-dimensional embedding vectors that I could compare. For the vector database, I chose Pinecone. I'd used it before in other RAG-type projects, and the developer experience is great; I highly recommend it if you ever need a vector database.

Next I had to find a data source. I considered a few options, but the top two were the Global Historical Climatology Network Monthly (GHCNM), a free government dataset run by NOAA, and OpenWeatherMap, a commercial weather data provider. OpenWeatherMap has a free tier, but it was too limited for my purposes: I needed at least a full year of monthly temperature data for any city, and that was in a completely different price tier. That was a shame, because OpenWeatherMap offers not just temperature data but also precipitation, pressure, etc., all of which would be useful for building a holistic "weather embedding vector" for a city. Despite those benefits, I went with the free government data.

Getting the data

I downloaded two massive files from the site: one with precipitation data for cities around the world, and one with minimum and maximum temperatures. All of the data was given as monthly averages. The files were clearly meant to be read with their own software or something in R, so I had to parse them into a coherent CSV file using Python.
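The exact layout depends on which version of the files you download, so treat the following as a minimal sketch under an assumption: it assumes a GHCN-Monthly-style fixed-width layout (an 11-character station ID, a 4-digit year, a 4-character element code such as `TMAX`, then twelve 8-character monthly fields whose first 5 characters hold the value). The offsets and the `parse_line` / `fixed_width_to_csv` helpers are illustrative, not the dataset's official tooling; check the readme that ships with the data before relying on them.

```python
import csv
import io

# Assumed fixed-width layout (0-indexed offsets), modeled on the GHCN-Monthly
# readme. Verify these against the readme for the files you actually have.
ID_SLICE = slice(0, 11)
YEAR_SLICE = slice(11, 15)
ELEMENT_SLICE = slice(15, 19)
FIRST_MONTH = 19   # offset where January's field starts
MONTH_WIDTH = 8    # 5-character value followed by 3 one-character flags
VALUE_WIDTH = 5

MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]


def parse_line(line: str) -> dict:
    """Turn one fixed-width record into a flat dict; values stay raw integers."""
    record = {
        "station_id": line[ID_SLICE].strip(),
        "year": int(line[YEAR_SLICE]),
        "element": line[ELEMENT_SLICE].strip(),
    }
    for i, month in enumerate(MONTHS):
        start = FIRST_MONTH + i * MONTH_WIDTH
        record[month] = int(line[start:start + VALUE_WIDTH])
    return record


def fixed_width_to_csv(lines) -> str:
    """Parse every non-blank line and return the records as CSV text."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["station_id", "year", "element", *MONTHS])
    writer.writeheader()
    for line in lines:
        if line.strip():  # skip blank lines
            writer.writerow(parse_line(line))
    return buf.getvalue()
```

Passing an open file as `lines` lets the returned text be written straight to a `.csv` file. Any scale factor (for example, values stored in tenths or hundredths of a degree) is also documented in the readme and isn't applied here.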

Unfortunately, I quickly realized that the temperature dataset covered FAR more cities than the precipitation dataset, so I had to scrap the precipitation data and stick to temperature alone (knowing full well this would throw off the results).

With that settled, I started cleaning and formatting the data using Pandas. I fixed the formatting and handled empty and negligible values: the dataset used 9999 for "negligible" and 8888 for empty, so I changed the 9999s to 0 and the 8888s to NaN. The dataset also came with a list of cities with their corresponding IDs and country codes, so I merged that list into the cleaned data by matching on the station IDs. This left me with a large CSV file I could query by country, city, latitude, or longitude.
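In plain Python, the sentinel mapping and the station-list merge described above come down to something like the sketch below. The `clean_value` and `merge_stations` names and the field names (`station_id`, `name`, `country`, `lat`, `lng`) are hypothetical; the 9999/8888 codes are the ones described above.

```python
import math

NEGLIGIBLE = 9999  # dataset code for "negligible", as described above
EMPTY = 8888       # dataset code for an empty value


def clean_value(raw: int) -> float:
    """Map the sentinel codes to usable numbers: 9999 -> 0, 8888 -> NaN."""
    if raw == NEGLIGIBLE:
        return 0.0
    if raw == EMPTY:
        return math.nan
    return float(raw)


def merge_stations(records, stations):
    """Attach station metadata to each data record by matching on station ID.

    records: iterable of dicts with a "station_id" key plus monthly values.
    stations: dict mapping station ID -> {"name", "country", "lat", "lng"}.
    Records whose ID is not in the station list are dropped (an inner join).
    """
    merged = []
    for rec in records:
        meta = stations.get(rec["station_id"])
        if meta is not None:
            merged.append({**rec, **meta})
    return merged
```

In Pandas, the same two steps map onto `df[month_cols].replace({9999: 0, 8888: float("nan")})` (restricted to the value columns so a year or ID can't be touched) and `df.merge(stations_df, on="station_id", how="inner")`, where `month_cols` and `stations_df` are again hypothetical names.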

I forgot to mention that the dataset went back to 1970, but not every year had the same data density. I counted the unique station IDs (i.e. distinct cities) in each year and found that 2019 had the highest density. Even so, I kept all the years, since I figured I could just average them (more on that later).
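Counting distinct stations per year is a small group-and-count. A plain-Python sketch, assuming the rows have been reduced to hypothetical `(station_id, year)` pairs:

```python
from collections import defaultdict


def stations_per_year(pairs):
    """Map each year to the number of distinct station IDs reporting in it."""
    seen = defaultdict(set)
    for station_id, year in pairs:
        seen[year].add(station_id)
    return {year: len(ids) for year, ids in seen.items()}


def densest_year(pairs):
    """Return the year with the most distinct stations."""
    counts = stations_per_year(pairs)
    return max(counts, key=counts.get)
```

In Pandas the equivalent is `df.groupby("year")["station_id"].nunique().idxmax()`, with the column names again being assumptions.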

Making the embeddings

Next, I loaded this CSV into a Pandas DataFrame for some manipulation. I needed a good way to condense this huge amount of monthly data into a single vector per city, so I averaged the min and max temperatures for each month across all years in the dataset. I.e., every January from 1970 to 2019 was averaged into a January average column, and so on, skipping any missing years. In the end, each city had a 24-dimensional vector (12 months × min/max), in the following format:

```json
{
  "Station": {
    "Jan_tmin": 0,
    "Jan_tmax": 0,
    "Feb_tmin": 0,
    "Feb_tmax": 0,
    "...": 0,
    "Dec_tmin": 0,
    "Dec_tmax": 0
  }
}
```
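The per-month averaging that produces those 24 values can be sketched as follows. It assumes each station's data has already been reshaped into one dict per year with hypothetical keys like `Jan_tmin`, with missing values stored as NaN (as in the cleaning step); missing years simply don't contribute to the average.

```python
import math

MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]


def climate_vector(yearly_rows):
    """Average each month's tmin/tmax across years, skipping missing (NaN) values.

    yearly_rows: one dict per year for a single station, e.g. {"Jan_tmin": -3.1, ...}.
    Returns 24 floats ordered Jan_tmin, Jan_tmax, Feb_tmin, ..., Dec_tmax;
    a month with no data in any year comes back as NaN.
    """
    vector = []
    for month in MONTHS:
        for stat in ("tmin", "tmax"):
            key = f"{month}_{stat}"
            values = [row[key] for row in yearly_rows
                      if key in row and not math.isnan(row[key])]
            vector.append(sum(values) / len(values) if values else math.nan)
    return vector
```

Pandas' grouped `mean()` skips NaN by default, so in a DataFrame the same result is a single `groupby` on the station column followed by `.mean()` over the 24 value columns.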

I normalized these values across the whole dataset into the range [0, 1] (I figured this would work better for cosine similarity queries) using min-max normalization, x' = (x - min) / (max - min).
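A minimal sketch of that normalization. Since "across the whole dataset" could mean either one min/max over every value or a separate min/max per dimension, the hypothetical `per_feature` flag covers both. Whichever variant is used, the US and global vectors should be scaled with the same min and max (for example, by normalizing them together in one call) so they live on the same scale.

```python
def min_max_normalize(vectors, per_feature=False):
    """Scale every value into [0, 1] with x' = (x - min) / (max - min).

    per_feature=False: one min/max taken over every value in every vector.
    per_feature=True:  a separate min/max for each dimension.
    A zero range maps to 0.0 instead of dividing by zero.
    """
    dims = len(vectors[0])
    if per_feature:
        columns = list(zip(*vectors))
        lows = [min(col) for col in columns]
        highs = [max(col) for col in columns]
    else:
        lowest = min(min(v) for v in vectors)
        highest = max(max(v) for v in vectors)
        lows, highs = [lowest] * dims, [highest] * dims
    return [
        [(x - lo) / (hi - lo) if hi > lo else 0.0
         for x, lo, hi in zip(v, lows, highs)]
        for v in vectors
    ]
```

The global variant preserves the relative shape of each city's annual temperature curve, while the per-feature variant stretches every month/stat column to fill [0, 1] on its own.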

I then upserted the vectors into Pinecone. If you've never used it, Pinecone is a vector database built for really fast similarity search: you query with an input vector, and it uses a distance metric to find the closest vectors in your dataset. This is really useful for applications like RAG (Retrieval-Augmented Generation), where the vector database finds the passages in a document most relevant to a question so that a language model can answer it. I chose Pinecone because it's a hosted service, so I didn't have to worry about the underlying infrastructure.
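Pinecone handles this at scale with approximate nearest-neighbor indexes, but what a cosine-metric query computes can be shown with a brute-force sketch. This is plain Python for illustration, not Pinecone's client API; `top_k` and the dict-based `index` are hypothetical names.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query, index, k=1):
    """Return the k (id, score) pairs in index most similar to query.

    index: dict mapping an ID (e.g. a city name) to its vector.
    """
    scored = [(vid, cosine_similarity(query, vec)) for vid, vec in index.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]
```

A linear scan like this costs one similarity computation per stored vector per query, which is fine for a few thousand cities; a hosted service earns its keep by keeping queries fast as the collection grows and by handling the infrastructure.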

My Pinecone records were structured differently for the two groups (US and global cities), since the US cities needed to be queried by latitude / longitude and the global cities by weather vector. Here's the code I wrote to construct the global records, where the city name is the ID, the 24 averaged temperatures are the vector, and the city's latitude and longitude are metadata:

```python
# df_rest holds the non-US stations: one row per station, with the averaged
# monthly columns (Jan_tminavg, Jan_tmaxavg, ..., Dec_tmaxavg) plus coordinates.
MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]

# Each record is an (id, values, metadata) tuple:
#   id       -> the station/city name
#   values   -> 24 numbers ordered Jan tmin, Jan tmax, Feb tmin, ..., Dec tmax
#   metadata -> the station's latitude and longitude
vectors_global = [
    (
        row["Station Name"],
        [row[f"{month}_{stat}avg"] for month in MONTHS for stat in ("tmin", "tmax")],
        {
            "latitude": row["Latitude"],
            "longitude": row["Longitude"],
        },
    )
    for _, row in df_rest.iterrows()
]
```

With the data now upserted into Pinecone, I could continue with making the actual frontend of the site.

The frontend

The app works as follows:

- User inputs a city name in the search bar.

- The site sends a request to the main AWS Lambda function.

- The function uses OpenCage Geocoder to convert the entered city name into latitude and longitude coordinates.

- These coordinates are used to query the first Pinecone database in order to get the closest station to the entered city.

- The 24-dimensional vector stored as metadata for this station is then used to query the second database for the most similar global city.

- That city and its coordinates are returned to the frontend.
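Step 4 is a plain nearest-neighbor lookup on coordinates. One thing to keep in mind if that lookup runs through a vector index: under a cosine metric, raw `(lat, lng)` pairs that point the same direction from `(0, 0)`, such as `(10, 20)` and `(40, 80)`, score as identical even though they are thousands of kilometres apart. The sketch below sidesteps the question by computing great-circle (haversine) distance directly. `nearest_station` and its `stations` dict are hypothetical, and with a few thousand US stations a linear scan like this is likely fast enough to run inside a Lambda.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lng1, lat2, lng2):
    """Great-circle distance in kilometres between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lng2 - lng1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    # Clamp to 1.0 so float rounding near antipodal points can't break asin.
    return 2 * EARTH_RADIUS_KM * math.asin(min(1.0, math.sqrt(a)))


def nearest_station(lat, lng, stations):
    """Return the name of the station closest to (lat, lng).

    stations: dict mapping station name -> (lat, lng) in degrees.
    """
    return min(stations, key=lambda name: haversine_km(lat, lng, *stations[name]))
```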

I built the frontend with Next.js. I wanted the page to be responsive to user input, so the React ecosystem seemed like the best way to go. With Next.js, Tailwind, and shadcn, I was able to quickly sketch out an input box with a submit button for my app. I wanted the results to be not only cool to look at but also to show the user something useful about the resulting city.

I decided to use the Google Maps API to highlight three places: the city the user entered, the US station closest to it, and the city elsewhere in the world with the most similar climate. That way, someone could see whether the cities had matching latitudes or other features, or just see which region of the world had a climate similar to theirs.

Design-wise, there was nothing fancy. I just picked a color I thought looked good ('slate-950') and used other variants of it for other components in the app. I originally thought I could use three.js to render a globe or something but it seemed like a lot of work for this project. I may add it in the future (note from the future: I did not, in fact, implement this).

Issues

Once I got the frontend and backend talking, I quickly noticed some issues. Some cities REFUSED to match to their closest station; instead, Pinecone would return a seemingly random station, which tended to skew the results badly. I also noticed that many of the returned cities were in Turkey...not sure why (probably overrepresented in the dataset? idk). It could also be that temperature data alone wasn't enough to get accurate matches, and I'd need precipitation and other data as well.

Metrics

As an aside, I should go over what my metrics were. Climates are commonly grouped using the Köppen climate classification, which sorts regions of the world by their climate characteristics; e.g., the eastern part of SoCal has a hot desert climate similar to much of the Middle East. I knew that if my results didn't roughly line up with the Köppen classes, I was nowhere near the right answer.
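As a rough sanity check along those lines, the main Köppen groups can be approximated from temperature alone. This sketch is an assumption-laden simplification: it estimates each month's mean as (tmin + tmax) / 2 from the interleaved 24-value vector (in °C, before normalization), uses the standard thresholds (coldest month at least 18 °C for A, warmest month below 10 °C for E, coldest month above 0 °C for C versus D), and cannot identify the arid group B at all, since that requires precipitation. Some versions of the scheme put the C/D boundary at -3 °C instead of 0 °C, hence the `cold_threshold` parameter. Both function names are hypothetical.

```python
def monthly_means(vector24):
    """Approximate each month's mean as (tmin + tmax) / 2 from an interleaved
    [Jan_tmin, Jan_tmax, Feb_tmin, ...] vector in °C."""
    return [(vector24[i] + vector24[i + 1]) / 2 for i in range(0, 24, 2)]


def koppen_temperature_group(monthly_means_c, cold_threshold=0.0):
    """Rough Köppen main group (A, C, D or E) from 12 monthly means in °C.

    The arid group B depends on precipitation, so it is never returned here.
    """
    if len(monthly_means_c) != 12:
        raise ValueError("expected 12 monthly values")
    coldest, warmest = min(monthly_means_c), max(monthly_means_c)
    if warmest < 10:
        return "E"  # polar: no month averages 10 °C or more
    if coldest >= 18:
        return "A"  # tropical: every month averages at least 18 °C
    if coldest > cold_threshold:
        return "C"  # temperate: mild coldest month
    return "D"      # continental: coldest month at or below the threshold
```

Comparing `koppen_temperature_group(monthly_means(v))` for the query city and its match gives a quick pass/fail signal, with the caveat that a desert city and a Mediterranean city can land in the same temperature-only group.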

Saved

I was going to just leave this project be, but then OpenWeatherMap contacted me and said I could use their "student" discount since the project wasn't commercial. This was huge: not only would I have access to far more cities across the globe, but also to relevant climate data like temperature, precipitation, and pressure. As part of the student program, their API lets you grab historical data from the past year. Given a latitude and longitude, the API returns JSON like the following:

```json
{
  "message": "Count: 24",
  "cod": "200",
  "city_id": 4298960,
  "calctime": 0.00297316,
  "cnt": 24,
  "list": [
    {
      "dt": 1578384000,
      "main": {
        "temp": 275.45,
        "feels_like": 271.7,
        "pressure": 1014,
        "humidity": 74,
        "temp_min": 274.26,
        "temp_max": 276.48
      },
      "wind": {
        "speed": 2.16,
        "deg": 87
      },
      "clouds": {
        "all": 90
      },
      "weather": [
        {
          "id": 501,
          "main": "Rain",
          "icon": "10n"
        }
      ],
      "rain": {
        "1h": 0.9
      }
    },
    ...
  ]
},
....
```

This was exactly the data I needed for the project. And it wasn't just monthly: they have this data for every hour going back to 1990. In other words, I could get an entire year of hourly data for any city in the world.
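To get from those hourly `list` entries back to monthly min/max features like the GHCN ones, one option is to take each day's minimum and maximum of `main.temp`, then average those per month. A minimal sketch, assuming the field names in the sample response above. OpenWeatherMap's default unit is Kelvin (note the 275.45 above), so temperatures are converted to °C. Days are bucketed in UTC for simplicity, which shifts day boundaries for most cities; a missing `rain` key is treated as 0 mm, which is itself an assumption; and since only one year of data is pulled, months are keyed by month number alone. `monthly_features` is a hypothetical helper.

```python
from collections import defaultdict
from datetime import datetime, timezone

KELVIN_OFFSET = 273.15


def monthly_features(records):
    """Aggregate hourly records into per-month averages of daily min/max (°C)
    plus total rainfall (mm).

    records: the dicts from the response's "list" array.
    Returns {month_number: {"tmin": ..., "tmax": ..., "rain_mm": ...}}.
    """
    daily_temps = defaultdict(list)  # UTC date -> hourly temps in °C
    rain_mm = defaultdict(float)     # month -> summed "1h" rain
    for rec in records:
        ts = datetime.fromtimestamp(rec["dt"], tz=timezone.utc)
        daily_temps[ts.date()].append(rec["main"]["temp"] - KELVIN_OFFSET)
        rain_mm[ts.month] += rec.get("rain", {}).get("1h", 0.0)

    daily_mins, daily_maxs = defaultdict(list), defaultdict(list)
    for day, temps in daily_temps.items():
        daily_mins[day.month].append(min(temps))
        daily_maxs[day.month].append(max(temps))

    return {
        month: {
            "tmin": sum(daily_mins[month]) / len(daily_mins[month]),
            "tmax": sum(daily_maxs[month]) / len(daily_maxs[month]),
            "rain_mm": rain_mm[month],
        }
        for month in sorted(daily_mins)
    }
```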

Next, I thought about how many cities I realistically needed, and settled on 4000 global cities and 4000 US cities. I figured that would cover most places a user might enter and give enough variety in the results to make the app interesting to use.

The API lets you pull 169 hours of data per call, so I wrote a giant looping function to query the API and save the data to JSON files. To pick the cities, I used cities.json. If you've never used it, it's just a giant JSON file where each entry has a city name, country, lat / lng, and administrative division (i.e. state or province). I loaded it into a DataFrame and sampled 10 cities at random from each country for my 4000 global cities, and 40 cities at random from each state for the US cities. Pulling this data took almost a month of leaving the code running at work and at home after work, at least 12 hours a day. In the end, I had two massive CSV files.
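The paging and sampling logic can be sketched without the HTTP calls. `time_windows` splits a time range into consecutive 169-hour request windows; the bounds are treated as half-open here, which is an assumption about the API (if it treats the end timestamp as inclusive, consecutive windows would share an hour). `sample_per_group` draws up to `n` random cities per country or state; the field names are illustrative rather than the exact cities.json schema. Both helpers are hypothetical, and the request function that would consume each window is left out.

```python
import random
from collections import defaultdict

HOURS_PER_CALL = 169       # per-call limit described above
SECONDS_PER_HOUR = 3600


def time_windows(start_ts, end_ts, hours=HOURS_PER_CALL):
    """Split [start_ts, end_ts) in Unix seconds into consecutive request windows."""
    step = hours * SECONDS_PER_HOUR
    windows = []
    t = start_ts
    while t < end_ts:
        windows.append((t, min(t + step, end_ts)))
        t += step
    return windows


def sample_per_group(cities, key, n, seed=0):
    """Randomly pick up to n cities from each group (e.g. each country or state).

    cities: list of dicts; key: the field to group on. A fixed seed keeps the
    sample reproducible between runs.
    """
    groups = defaultdict(list)
    for city in cities:
        groups[city[key]].append(city)
    rng = random.Random(seed)
    picked = []
    for members in groups.values():
        picked.extend(rng.sample(members, min(n, len(members))))
    return picked
```

A full year of hourly data (365 × 24 = 8760 hours) works out to ceil(8760 / 169) = 52 calls per city, with the last window partial.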

After downloading everything, I sorted the data so that each city's rows were grouped together. I then ran some analyses, because my download log showed that some cities had missing data. For each group (global and US), I wanted to find the most common number of rows per city, since that tells you how many hours of data each city has. I also decided to train an autoencoder on the data and use it to make the embedding vectors for each city instead. Why? For fun, but also because I thought it would give better results.

I got the following data for Global:

Most common number of rows and how many unique lat/lng pairs per count:

- 1st most common: 8281 rows in 3030 lat/lng pairs

- 2nd most common: 8112 rows in 744 lat/lng pairs

and then for the US:

Most common number of rows and how many unique lat/lng pairs per count:

- 1st most common: 8112 rows in 3620 lat/lng pairs

- 2nd most common: 7943 rows in 36 lat/lng pairs

- 3rd most common: 16224 rows in 7 lat/lng pairs

- 4th most common: 7098 rows in 3 lat/lng pairs

- 5th most common: 7774 rows in 2 lat/lng pairs

I think the US data had some doubled rows (16224 is exactly 2 × 8112) that I had to go back and fix. That left 3620 usable cities for the US and 3774 for global.
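The row-count tally above is a count of counts. A sketch, assuming each hourly row is represented by its city's hypothetical `(lat, lng)` key. As a side note, every count listed above is a whole multiple of the 169-hour call size (8112 = 48 × 169, 8281 = 49 × 169, 7943 = 47 × 169, 7774 = 46 × 169, 7098 = 42 × 169, 16224 = 96 × 169), which is consistent with each city receiving a whole number of calls; the hypothetical `whole_calls` helper checks that.

```python
from collections import Counter


def row_count_ranking(row_keys):
    """Rank row counts by how many cities have exactly that many rows.

    row_keys: iterable with one (lat, lng) tuple per hourly row.
    Returns [(rows_per_city, number_of_cities), ...], most common first.
    """
    rows_per_city = Counter(row_keys)                   # (lat, lng) -> rows
    cities_per_count = Counter(rows_per_city.values())  # rows -> cities
    return cities_per_count.most_common()


def whole_calls(row_count, hours_per_call=169):
    """Return how many full calls a row count represents, or None if it isn't a whole multiple."""
    calls, remainder = divmod(row_count, hours_per_call)
    return calls if remainder == 0 else None
```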

Final Update (Sept. 2024)

So...I started a new project. I'm hoping at some point I'll get the time to go back and re-run this site with the new data. For now, the project is still up and running with the original dataset. As discussed before, your mileage may vary!