hihat.ai

What is hihat.ai?

I started hihat.ai with my friend Travis (go check out his portfolio site) to solve a problem we both saw coming as musicians: what would people like us do if AI took over music production? Sure, people will probably always make art regardless of monetary incentive, but for a lot of musicians, music is the only way they support themselves. Our idea was to let musicians (we'll call them "contributors") upload their sample packs to train custom music AI models. These models would then be distributed on a marketplace (if you're a musician, think of something like Splice), where other musicians, i.e. "users", could use the models to generate new samples in the style of the contributor whose data the model was trained on. At the end of the month, every contributor would get paid based on how often their model was used, similar to how Spotify pays out royalties.
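To make the payout idea concrete, here is a minimal sketch of a pro-rata split, assuming a fixed monthly pool divided according to each model's share of total generations. The function name, the pool size, and the usage counts are all hypothetical; the only thing taken from the plan above is "paid based on how often their model was used."

```javascript
// Hypothetical pro-rata payout: each contributor receives a share of the
// monthly pool proportional to how often their model was used.
function computePayouts(poolCents, usageByContributor) {
  const entries = Object.entries(usageByContributor);
  const totalUses = entries.reduce((sum, [, uses]) => sum + uses, 0);

  // Nobody generated anything this month, so nobody is paid.
  if (totalUses === 0) {
    return Object.fromEntries(entries.map(([id]) => [id, 0]));
  }

  // Round down to whole cents so the payouts never exceed the pool.
  return Object.fromEntries(
    entries.map(([id, uses]) => [id, Math.floor((poolCents * uses) / totalUses)])
  );
}

// Example: a $1,000.00 pool and two contributors (made-up numbers).
const examplePayouts = computePayouts(100000, { contributorA: 30, contributorB: 10 });
console.log(examplePayouts);
```

Working in integer cents avoids floating-point drift; any leftover cents from rounding down would need their own rule.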

The Stack

Our stack consisted of Next.js on the frontend and Node.js on the backend, running as AWS Lambda functions. For authentication, I went with AWS Cognito to keep all the infra in one place, and the same reasoning applied to my choice of DynamoDB for the persistence layer. I used Tailwind for simple styling, which, coupled with NextUI, let me develop the MVP quickly.

For the backend, I ended up using the Serverless Framework to handle provisioning the AWS resources. It worked great, and I highly recommend it for anyone who doesn't want to deal with the AWS console experience. Serverless lets you declare your infrastructure and Lambda functions in a single YAML file, with the resources written in CloudFormation syntax. Because the Lambda functions are declared in the same file as the resources, it is very simple to wire up event-based routines, such as a Lambda that automatically creates a "user" in a DynamoDB table when a new user is created in Cognito.
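As a rough illustration of that Cognito-to-DynamoDB routine, here is a minimal sketch of a post-confirmation trigger. It assumes the standard Cognito trigger event shape (user attributes under event.request.userAttributes). The putUser function is a hypothetical stand-in for the actual DynamoDB write, and the helper names are made up for this example.

```javascript
// Map a Cognito post-confirmation event to a row for the users table.
function buildUserItem(event) {
  const attrs = event.request.userAttributes;
  return {
    userID: attrs.sub, // Cognito's unique ID for the user
    email: attrs.email,
  };
}

// putUser is a hypothetical function that writes one item to DynamoDB
// (for example, a thin wrapper around the AWS SDK's put operation).
function makePostConfirmationHandler(putUser) {
  return async function handler(event) {
    await putUser(buildUserItem(event));
    // Cognito triggers must return the event so the sign-up can complete.
    return event;
  };
}

// Example event with made-up values, trimmed to the fields used above.
const exampleSignupEvent = {
  triggerSource: 'PostConfirmation_ConfirmSignUp',
  request: { userAttributes: { sub: 'abc-123', email: 'artist@example.com' } },
};
console.log(buildUserItem(exampleSignupEvent));
```

Injecting putUser keeps the mapping logic testable without touching AWS; in a deployed Lambda it would be bound once at module load.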

Frontend

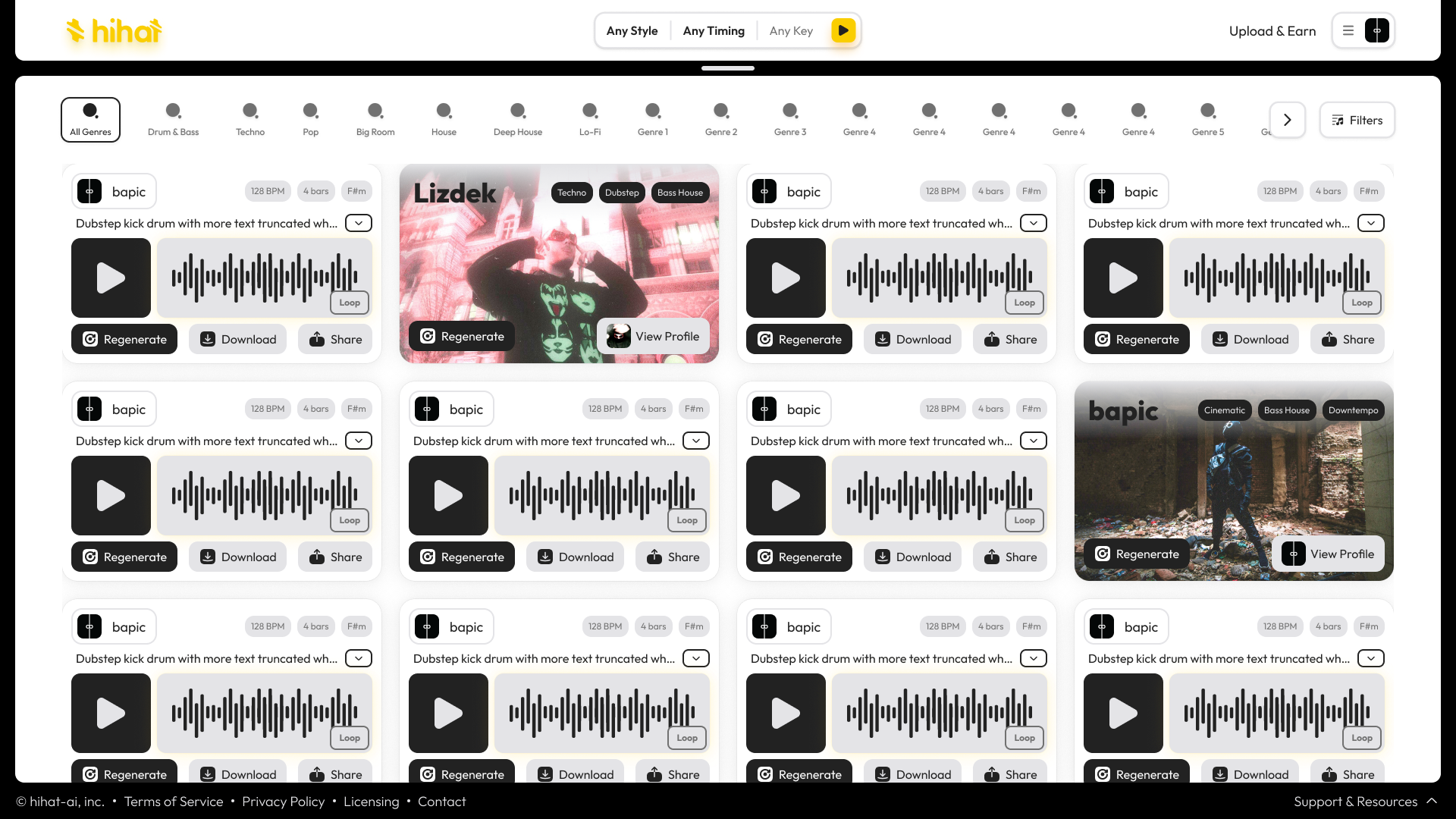

Now, luckily Travis is an excellent designer / UI guy so I didn't have to handle that side of the frontend. Instead, he made mockups in Figma and I just translated them to code. I don't remember exactly every UI/UX decision he made and why he made them, but a few notable ones I remember were ease of upload for artists and satisfying audio handling. Fig. 1 shows our main marketplace page when we launched.

Figure 1: Screenshot of hihat.ai interface showing various sample packs and a music player.

The first point was simple: have as little friction as possible in the audio upload / model creation process for artists. One of the ways we implemented this was by allowing drag and drop anywhere on screen for file upload, along with easy-to-use text inputs for the model metadata. For the drag and drop functionality, I used react-dropzone.

The API for react-dropzone is easy to use. Its main interface is the "Dropzone" component, which handles all the behavior you need. Here's some example code from their GitHub:

    import React from 'react'
    import Dropzone from 'react-dropzone'

    <Dropzone onDrop={acceptedFiles => console.log(acceptedFiles)}>
      {({getRootProps, getInputProps}) => (
        <section>
          <div {...getRootProps()}>
            <input {...getInputProps()} />
            <p>Drop here</p>
          </div>
        </section>
      )}
    </Dropzone>
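The onDrop callback receives an array of File objects, so it is a natural place to decide what actually gets uploaded. As a sketch of that step, the helper below splits a drop into audio files and everything else. The function names and the extension list are hypothetical, and react-dropzone's own accept prop can do similar filtering at the component level.

```javascript
// Hypothetical list of sample formats to allow.
const AUDIO_EXTENSIONS = ['.wav', '.mp3', '.aiff', '.flac'];

// A file counts as audio if the browser reports an audio MIME type
// or, failing that, if its name ends in a known audio extension.
function isAudioFile(file) {
  const name = file.name.toLowerCase();
  const hasAudioType = typeof file.type === 'string' && file.type.startsWith('audio/');
  return hasAudioType || AUDIO_EXTENSIONS.some((ext) => name.endsWith(ext));
}

// Split dropped files into ones to upload and ones to reject.
function splitAudioFiles(files) {
  const audio = [];
  const other = [];
  for (const file of files) {
    (isAudioFile(file) ? audio : other).push(file);
  }
  return { audio, other };
}

// Plain objects stand in for browser File objects here.
const exampleDrop = [
  { name: 'kick_01.WAV', type: 'audio/wav' },
  { name: 'cover.png', type: 'image/png' },
  { name: 'snare.flac', type: '' },
];
const exampleSplit = splitAudioFiles(exampleDrop);
console.log(exampleSplit.audio.map((f) => f.name), exampleSplit.other.map((f) => f.name));
```

In a component this would be called as onDrop={files => splitAudioFiles(files)}, with the rejected list used to show an error message.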

Another important aspect of our UI was the audio player. For this, I used wavesurfer.js, a JavaScript audio player library built on HTML5 audio and the Web Audio API that renders a customizable waveform. We used it to customize the look of the waveform itself. One cool aspect of this library is that the audio attached to the player is analyzed when the player is created, so each sound gets its own waveform (as expected). In Fig. 2 you can see an example of our audio player with its waveform. The waveform was part of our <SampleCard/> component, which held artist info and metadata as well as the audio itself.

Figure 2: Screenshot of hihat.ai SampleCard component showing custom waveform and audio player.

The majority of the audio player's functionality is set in a useEffect hook:

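    import { useEffect } from 'react'
    import WaveSurfer from 'wavesurfer.js'

    // Runs inside the SampleCard component: waveRef comes from useRef,
    // setWaveSurfer and setIsPlaying are useState setters.
    useEffect(() => {
      if (!waveRef.current) return;

      const ws = WaveSurfer.create({
        container: waveRef.current,
        height: 'auto',
        splitChannels: false,
        normalize: true,
        waveColor: 'rgba(0, 0, 0, 0.5)',
        progressColor: '#000000',
        cursorColor: '#FFFFFF',
        cursorWidth: 0,
        barWidth: 5,
        barGap: 5,
        barRadius: 30,
        barHeight: null,
        barAlign: '',
        minPxPerSec: 1,
        fillParent: true,
        url: audio_url, // passing url already loads the audio, so no separate ws.load() call
        mediaControls: false,
        autoplay: false,
        interact: false,
        hideScrollbar: true,
        audioRate: 1,
        autoScroll: true,
        autoCenter: true,
        sampleRate: 8000
      });

      setWaveSurfer(ws);

      // Reset the player when playback reaches the end
      ws.on('finish', () => {
        setIsPlaying(false);
        ws.seekTo(0);
      });

      // Destroy the instance on unmount or when audio_url changes,
      // otherwise old waveforms pile up in the container
      return () => ws.destroy();
    }, [audio_url]);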
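The effect above only reacts to the finish event. One way to keep the isPlaying state in step with the player is to listen for play and pause as well. This is a sketch that assumes the play, pause, and finish events of wavesurfer.js v7. The bindPlaybackState name is hypothetical, and the fake player exists only so the example runs outside a browser.

```javascript
// Mirror the player's state into React state (or any setter function).
function bindPlaybackState(ws, setIsPlaying) {
  ws.on('play', () => setIsPlaying(true));
  ws.on('pause', () => setIsPlaying(false));
  ws.on('finish', () => {
    setIsPlaying(false);
    ws.seekTo(0); // rewind so the next play starts from the beginning
  });
}

// Minimal stand-in for a WaveSurfer instance: just enough of the
// on/seekTo surface to exercise bindPlaybackState. Not the real library.
function createFakePlayer() {
  const listeners = {};
  return {
    position: 0.5,
    on(event, fn) {
      (listeners[event] = listeners[event] || []).push(fn);
    },
    emit(event) {
      (listeners[event] || []).forEach((fn) => fn());
    },
    seekTo(progress) {
      this.position = progress;
    },
  };
}

let exampleIsPlaying = false;
const fakePlayer = createFakePlayer();
bindPlaybackState(fakePlayer, (value) => { exampleIsPlaying = value; });

fakePlayer.emit('play');   // exampleIsPlaying becomes true
fakePlayer.emit('finish'); // exampleIsPlaying becomes false, position resets to 0
```

In the component, bindPlaybackState(ws, setIsPlaying) would replace the single ws.on('finish', ...) call, and the play button would only need to call ws.playPause().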

Backend

As explained before, I used the Serverless Framework to build out the backend for hihat. The functionality started off as fairly standard CRUD routes, i.e. create a user, update a user, create a model, etc. Here's an excerpt from our serverless.yml to give an idea of how provisioning resources works:

    # an example function
    create-new-user:
      handler: src/users.CreateNewUser
      events:
        - http:
            path: /users
            method: post
            cors:
              origins:
                - 'SOME_ORIGIN'
                - 'PROBABLY_LOCALHOST'
              headers:
                - Content-Type
                - X-Amz-Date
                - Authorization
                - X-Api-Key
                - X-Amz-Security-Token
                - X-Amz-User-Agent
    ...

    # an example resource
    UsersTable:
      Type: AWS::DynamoDB::Table
      DeletionPolicy: Retain
      Properties:
        TableName: users-table-dev
        AttributeDefinitions:
          - AttributeName: email
            AttributeType: S
          - AttributeName: userID
            AttributeType: S
        KeySchema:
          - AttributeName: userID
            KeyType: HASH
        GlobalSecondaryIndexes:
          - IndexName: email-index
            KeySchema:
              - AttributeName: email
                KeyType: HASH
            Projection:
              ProjectionType: ALL
        BillingMode: PAY_PER_REQUEST
    ...
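For a sense of what sits behind a route like POST /users, here is a minimal sketch of a handler in the style of src/users.CreateNewUser. It assumes API Gateway's Lambda proxy integration (the request body arrives as a string in event.body, and the handler returns statusCode, headers, and body). The saveUser function is a hypothetical stand-in for the DynamoDB write, and the validation rules are made up for the example.

```javascript
// Build a Lambda proxy response with a JSON body.
function respond(statusCode, payload) {
  return {
    statusCode,
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(payload),
  };
}

// saveUser is a hypothetical function that writes the item to the users table.
function makeCreateNewUser(saveUser) {
  return async function CreateNewUser(event) {
    let body;
    try {
      body = JSON.parse(event.body || '{}');
    } catch (err) {
      return respond(400, { error: 'Request body must be valid JSON' });
    }

    // userID is the table's hash key and email backs the email-index GSI.
    if (!body || !body.userID || !body.email) {
      return respond(400, { error: 'userID and email are required' });
    }

    await saveUser({ userID: body.userID, email: body.email });
    return respond(201, { userID: body.userID });
  };
}

// Example wiring with an in-memory store instead of DynamoDB.
const exampleStore = [];
const exampleCreateNewUser = makeCreateNewUser(async (user) => {
  exampleStore.push(user);
});
```

With the proxy integration, the cors block in serverless.yml covers the preflight request, but the handler's own responses still need an Access-Control-Allow-Origin header added to headers for browsers to accept them.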

One issue I ran into while developing the backend had to do with resources provisioned outside of Serverless. I had made our Cognito user pool for production in the AWS console (bad idea) before realizing I could do it with Serverless, so the pool was not handled like the rest of our resources. I couldn't delete the pool after switching fully into Serverless because by then we had already gathered users who had signed up during our "demo day" at Buildspace S3.

Demo day

As an aside, I should go over how I dealt with the demo day user signup. At the time, we didn't have much of a product to show (it was more or less a single text box that could randomly generate samples with one model), so Travis wanted to incentivize signups so we could blast emails out when we did end up launching. He created stickers with QR codes (during that QR code / Stable Diffusion generator craze) which took users to a page on our site where they could sign up as an "SF User". The thought was we would give people special badges and perks for having signed up with us before launch.

On my end, I created a new DynamoDB table that held the userId information with their emails, etc. In the main user table, I had a boolean attribute for "isSf". Unfortunately, we never got around to making any special perks.

Fixing the Cognito issue

Back to Cognito: I resolved the issue by specifying the ARN of the user pool as an environment variable in the yml file. In fact, another reason Serverless felt seamless was that I could pass stage-specific environment variables to the Lambda functions without much effort. For example, I would suffix the resource names (i.e. table, bucket) with the stage name. This way I could keep resource names unique where AWS requires it (S3 bucket names, for instance, must be globally unique) and also fully separate my resources between the dev and prod stages.
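On the Lambda side, the stage-suffix convention can be sketched as a small helper. This assumes the stage is exposed to the function through an environment variable; the variable name STAGE and the helper name are hypothetical, while the users-table-dev result matches the TableName shown earlier.

```javascript
// Hypothetical helper: suffix a base resource name with the deployment stage.
function resourceName(base, stage) {
  // Keep stage names to lowercase letters, digits, and hyphens so the
  // result is also a valid S3 bucket name.
  if (!/^[a-z0-9-]+$/.test(stage)) {
    throw new Error(`Invalid stage name: ${stage}`);
  }
  return `${base}-${stage}`;
}

// STAGE is an assumed environment variable, set per stage in serverless.yml.
const currentStage = process.env.STAGE || 'dev';
const usersTableName = resourceName('users-table', currentStage);
console.log(usersTableName);
```

Because every name is derived from the stage, dev and prod never share a table or bucket, and adding a new stage does not require touching the handler code.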

Other issues

A lot of the issues I ran into came from the long development cycles inherent in writing serverless code. Every time I ran serverless deploy --stage dev, it would take a couple of minutes for the code to deploy and become available on the API route. Because of this, every stupid little bug took forever to root out. This is part of why I no longer use serverless functions to build out new backends. Nowadays, I prefer to stick to a server I can easily compile and containerize to deploy anywhere, such as a Spring Boot app.